UPDATE #2: To make sure everyone clearly recognizes my involvement with both papers, I provided Anthony suggested text and references for his article [I am not a co-author of the Watts et al paper], and am a co-author on the McNider et al paper.

UPDATE: There has been discussion as to whether the Time of Observation Bias (TOB) could affect the conclusions reached in Watts et al (2012). This is a valid concern. Thus the “Game Changing” finding that the trends are actually different for well- and poorly-sited locations is tentative until it is shown whether or not TOB alters the conclusions. The issue, however, is not easy to resolve. In our paper

Pielke Sr., R.A., T. Stohlgren, L. Schell, W. Parton, N. Doesken, K. Redmond, J. Moeny, T. McKee, and T.G.F. Kittel, 2002: Problems in evaluating regional and local trends in temperature: An example from eastern Colorado, USA. Int. J. Climatol., 22, 421-434.

this is what we concluded [highlight added]

The time of observation biases clearly are a problem in using raw data from the US Cooperative stations. Six stations used in this study have had documented changes in times of observation. Some stations, like Holly, have had numerous changes. Some of the largest impacts on monthly and seasonal temperature time series anywhere in the country are found in the Central Great Plains as a result of relatively frequent dramatic interdiurnal temperature changes. Time of observation adjustments are therefore essential prior to comparing long-term trends.

We attempted to apply the time of observation adjustments using the paper by Karl et al. (1986). The actual implementation of this procedure is very difficult, so, after several discussions with NCDC personnel familiar with the procedure, we chose instead to use the USHCN database to extract the time of observation adjustments applied by NCDC. We explored the time of observation bias and the impact on our results by taking the USHCN adjusted temperature data for 3 month seasons, and subtracted the seasonal means computed from the station data adjusted for all except time of observation changes in order to determine the magnitude of that adjustment. An example is shown here for Holly, Colorado (Figure 1), which had more changes than any other site used in the study.

What you would expect to see is a series of step function changes associated with known dates of time of observation changes. However, what you actually see is a combination of step changes and other variability, the causes of which are not all obvious. It appeared to us that editing procedures and procedures for estimating values for missing months resulted in computed monthly temperatures in the USHCN differing from what a user would compute for that same station from averaging the raw data from the Summary of the Day Cooperative Data Set. This simply points out that when manipulating and attempting to homogenize large data sets, changes can be made in an effort to improve the quality of the data set that may or may not actually accomplish the initial goal.

Overall, the impact of applying time of observation adjustment at Holly was to cool the data for the 1926–58 with respect to earlier and later periods. The magnitude of this adjustment of 2 °C is obviously very large, but it is consistent with changing from predominantly late afternoon observation times early in the record to early morning observation times in recent years in the part of the country where time of observation has the greatest effect. Time of observation adjustments were also applied at five other sites.
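The differencing step described in the quoted passage can be sketched in a few lines. This is only an illustration, not the code used for the 2002 paper: the function names, the dictionary layout keyed by (year, month), the season definition (December assigned to the following year's winter), and the sample values are all assumptions made for the example.

```python
from collections import defaultdict

# Assumed season definition: December counts toward the following year's winter.
SEASON_OF_MONTH = {12: "DJF", 1: "DJF", 2: "DJF",
                   3: "MAM", 4: "MAM", 5: "MAM",
                   6: "JJA", 7: "JJA", 8: "JJA",
                   9: "SON", 10: "SON", 11: "SON"}


def seasonal_means(monthly):
    """Average monthly temperatures {(year, month): degC} into 3-month seasons.

    Only seasons with all three months present are returned.
    """
    buckets = defaultdict(list)
    for (year, month), temp in monthly.items():
        season_year = year + 1 if month == 12 else year
        buckets[(season_year, SEASON_OF_MONTH[month])].append(temp)
    return {key: sum(vals) / 3 for key, vals in buckets.items() if len(vals) == 3}


def tob_adjustment(fully_adjusted, adjusted_except_tob):
    """Seasonal difference: (all adjustments) minus (all adjustments except TOB)."""
    full = seasonal_means(fully_adjusted)
    partial = seasonal_means(adjusted_except_tob)
    return {key: full[key] - partial[key] for key in full if key in partial}


# Made-up monthly values for one summer, for illustration only.
with_tob = {(1950, 6): 21.0, (1950, 7): 24.0, (1950, 8): 23.0}
without_tob = {(1950, 6): 22.0, (1950, 7): 25.5, (1950, 8): 24.5}
adjustment = tob_adjustment(with_tob, without_tob)
```

A negative value in `adjustment` means the time of observation adjustment cooled that season. Plotting these differences against time is what would be expected to reveal step changes at the documented dates of observation-time changes.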

Until this issue is resolved, the Game Changer aspect of the Watts et al 2012 study is tentative. [Anthony reports he is actively working to resolve this issue on hold]. The best way to address the TOB issue is to use data from sites in the Watts et al data set that have hourly resolution. For those years in which the station did not change location, the TOB can be computed directly. The Karl et al (1986) method of TOB adjustment, in my view, needs to be more clearly defined and further examined in order to better address this issue. I understand research is underway to examine the TOB issue in detail, and results will be reported by Anthony when ready.
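As a rough sketch of how the TOB could be computed from hourly data, the example below emulates a max/min thermometer that is reset once a day at a chosen hour and compares the resulting (Tmax + Tmin)/2 means with those from a midnight reset. This is not the Karl et al (1986) method, and the function names, the midnight reference, and the synthetic temperatures are assumptions made for illustration.

```python
def minmax_means(hourly, obs_hour):
    """Daily (Tmax + Tmin) / 2 values for a max/min thermometer reset at obs_hour.

    hourly: temperatures in degC, one per hour, with index 0 at 00:00 of the first day.
    Each 24-hour window includes both of its end points, because a thermometer
    that has just been reset still registers the temperature at the reset time.
    """
    means = []
    start = obs_hour
    while start + 24 < len(hourly):
        window = hourly[start:start + 25]
        means.append((max(window) + min(window)) / 2)
        start += 24
    return means


def time_of_observation_bias(hourly, obs_hour, reference_hour=0):
    """Mean difference between resets at obs_hour and at reference_hour (midnight)."""
    observed = minmax_means(hourly, obs_hour)
    reference = minmax_means(hourly, reference_hour)
    n = min(len(observed), len(reference))
    return sum(observed[:n]) / n - sum(reference[:n]) / n


# Synthetic data: four days with flat 0 degC nights and warm afternoons
# (12:00 to 17:00). Day 1 has an unusually hot afternoon.
hourly = [0.0] * 96
for day in range(4):
    peak = 20.0 if day == 1 else 10.0
    for hour in range(12, 18):
        hourly[day * 24 + hour] = peak

afternoon_bias = time_of_observation_bias(hourly, obs_hour=17)
```

With a 17:00 reset, the hot afternoon of day 1 sets the maximum for two consecutive observational days, so `afternoon_bias` comes out positive for this synthetic series. With real hourly records the same comparison could be repeated for each documented observation time at a station.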

*************ORIGINAL POST****************

There are two recent papers that raise serious questions about the accuracy of the quantitative diagnosis of global warming by NCDC, GISS, CRU and BEST based on land surface temperature anomalies. These papers are the culmination of work on two areas of uncertainty that were identified in the paper

Pielke Sr., R.A., C. Davey, D. Niyogi, S. Fall, J. Steinweg-Woods, K. Hubbard, X. Lin, M. Cai, Y.-K. Lim, H. Li, J. Nielsen-Gammon, K. Gallo, R. Hale, R. Mahmood, S. Foster, R.T. McNider, and P. Blanken, 2007: Unresolved issues with the assessment of multi-decadal global land surface temperature trends. J. Geophys. Res., 112, D24S08, doi:10.1029/2006JD008229.

The Summary

- One paper [Watts et al 2012] shows that siting quality does matter. A warm bias results in the continental USA when poorly sited locations are used to construct a gridded analysis of land surface temperature anomalies.

- The other paper [McNider et al 2012] shows that not only does the height at which minimum temperature observations are made matter, but even slight changes in vertical mixing (such as from adding a small shed near the observation site, even in an otherwise pristine location) can increase the measured temperature at the height of the observation. This can occur when there is little or no layer averaged warming.

The Two Papers

Watts et al, 2012: An area and distance weighted analysis of the impacts of station exposure on the U.S. Historical Climatology Network temperatures and temperature trends [to be submitted to JGR]

McNider, R. T., G.J. Steeneveld, B. Holtslag, R. Pielke Sr, S. Mackaro, A. Pour Biazar, J. T. Walters, U. S. Nair, and J. R. Christy (2012). Response and sensitivity of the nocturnal boundary layer over land to added longwave radiative forcing, J. Geophys. Res., doi:10.1029/2012JD017578, in press. [for the complete paper, click here]

To Provide Context

First, however, to make sure that my perspective on climate is properly understood:

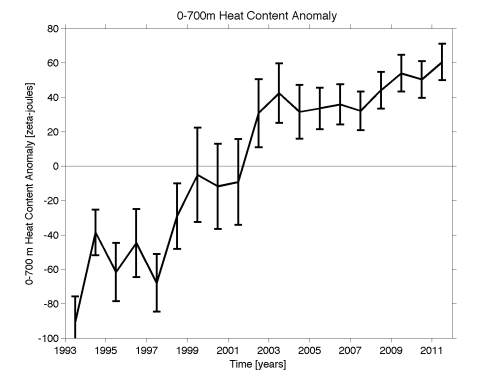

i) There has been global warming over the last several decades. The ocean is the component of the climate system that is best suited for quantifying climate system heat change [Pielke, 2003]; e.g., see the figure below from NOAA’s Upper Ocean Heat Content Anomaly for their estimate of the magnitude of warming since 1993.

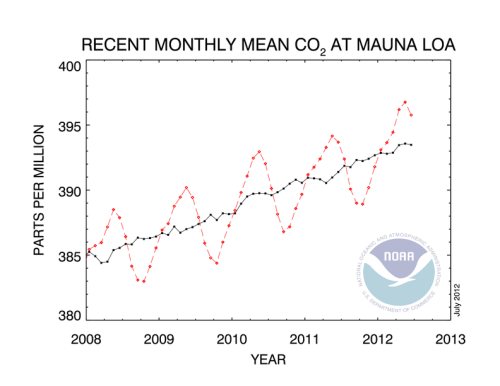

ii) The human addition of CO2 to the atmosphere is a first-order climate forcing; e.g., see Pielke et al (2009) and the NOAA plot below.

However, the Watts et al 2012 and McNider et al 2012 papers refute a major assumption in the CCSP 1.1 report

Temperature Trends in the Lower Atmosphere – Understanding and Reconciling Differences

that variations in surface temperature anomalies are random and thus can be averaged to create area means that are robust measures of the average surface temperature in that region (and, when summed globally, provide an accurate global land average surface temperature anomaly). This assumption of randomness, with no systematic biases, is shown in the two papers to be incorrect.

In the chapter

Lanzante et al 2005: What do observations indicate about the changes of temperatures in the atmosphere and at the surface since the advent of measuring temperatures vertically?

they write that [highlight added]

“Currently, there are three main groups creating global analyses of surface temperature (see Table 3.1), differing in the choice of available data that are utilized as well as the manner in which these data are synthesized.

My Comment: Now there is the addition of Richard Muller’s BEST analysis.

Since the network of surface stations changes over time, it is necessary to assess how well the available observations monitor global or regional temperature. There are three ways in which to make such assessments (Jones, 1995). The first is using “frozen grids” where analysis using only those grid boxes with data present in the sparsest years is used to compare to the full data set results from other years (e.g., Parker et al., 1994). The results generally indicate very small errors on multi-annual timescales (Jones, 1995).”

My Comment: The “frozen grids” combine data from poorly- and well-sited locations, and from different heights. A warm bias results. This is a similar type of analysis to that used in BEST.

The second technique is sub-sampling a spatially complete field, such as model output, only where in situ observations are available. Again the errors are small (e.g., the standard errors are less than 0.06ºC for the observing period 1880 to 1990; Peterson et al., 1998b).

My Comment: Again, there is the assumption that no systematic biases exist in the observations. Poorly sited locations are blended with well-sited locations which, based on Watts et al (2012), artificially elevates the sub-sampled trends.

The third technique is comparing optimum averaging, which fills in the spatial field using covariance matrices, eigenfunctions or structure functions, with other analyses. Again, very small differences are found (Smith et al., 2005). The fidelity of the surface temperature record is further supported by work such as Peterson et al. (1999) which found that a rural subset of global land stations had almost the same global trend as the full network and Parker (2004) that found no signs of urban warming over the period covered by this report.

My Comment: Here is where the assumption that the set of temperature anomalies is random is presented. Watts et al (2012) provide observational evidence, and McNider et al (2012) present theoretical reasons, why this is an incorrect assumption.

Since the three chosen data sets utilize many of the same raw observations, there is a degree of interdependence. Nevertheless, there are some differences among them as to which observing sites are utilized. An important advantage of surface data is the fact that at any given time there are thousands of thermometers in use that contribute to a global or other large-scale average. Besides the tendency to cancel random errors, the large number of stations also greatly facilitates temporal homogenization since a given station may have several “near-neighbors” for “buddy-checks.” While there are fundamental differences in the methodology used to create the surface data sets, the differing techniques with the same data produce almost the same results (Vose et al., 2005a).

My Comment: Their statement that there is “the tendency to cancel random errors” is shown in the Watts et al 2012 and McNider et al 2012 papers to be incorrect. This means that the procedure behind their claim that “the large number of stations also greatly facilitates temporal homogenization since a given station may have several ‘near-neighbors’ for ‘buddy-checks’” erroneously averages together sites with a warm bias.
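The distinction between random errors and a shared bias can be illustrated with a small simulation. This is a toy sketch under stated assumptions, not an analysis from either paper: the function name, the noise level, the fraction of biased stations, and the bias magnitude are all hypothetical values chosen for illustration.

```python
import random


def network_mean_error(n_stations, noise_sd, biased_fraction, bias, rng):
    """Average error over a network in which some stations share a warm bias."""
    n_biased = int(n_stations * biased_fraction)
    total = 0.0
    for i in range(n_stations):
        error = rng.gauss(0.0, noise_sd)  # random, zero-mean part
        if i < n_biased:
            error += bias                 # systematic part, same sign at each biased site
        total += error
    return total / n_stations


rng = random.Random(0)  # fixed seed so repeated runs give the same numbers
for n in (10, 100, 10000):
    unbiased = network_mean_error(n, 0.5, 0.0, 0.3, rng)
    biased = network_mean_error(n, 0.5, 0.5, 0.3, rng)
    print(n, round(unbiased, 3), round(biased, 3))
```

As the number of stations grows, the mean error of the unbiased network shrinks toward zero, while the mean error of the partly biased network settles near `biased_fraction * bias`. Adding more stations reduces only the random part.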

Bottom Line Conclusion: The Watts et al 2012 and McNider et al 2012 papers have presented the climate community with evidence of major systematic warm biases in the analysis of multi-decadal land surface temperature anomalies by NCDC, GISS, CRU and BEST. The two papers also help explain the discrepancy seen between the multi-decadal temperature trends in the surface and lower tropospheric temperature that was documented in

Klotzbach, P.J., R.A. Pielke Sr., R.A. Pielke Jr., J.R. Christy, and R.T. McNider, 2009: An alternative explanation for differential temperature trends at the surface and in the lower troposphere. J. Geophys. Res., 114, D21102, doi:10.1029/2009JD011841.

Klotzbach, P.J., R.A. Pielke Sr., R.A. Pielke Jr., J.R. Christy, and R.T. McNider, 2010: Correction to: “An alternative explanation for differential temperature trends at the surface and in the lower troposphere. J. Geophys. Res., 114, D21102, doi:10.1029/2009JD011841”, J. Geophys. Res., 115, D1, doi:10.1029/2009JD013655.

I look forward to discussing the conclusions of these two studies in the coming weeks and months.