There is a new and, in my view, scientifically flawed report published by the National Research Council. The report is

A National Strategy for Advancing Climate Modeling

I have a few comments on this report in my post today which document its failings. First, the overarching perspective of the authors of the NRC report is [highlight added]

As climate change has pushed climate patterns outside of historic norms, the need for detailed projections is growing across all sectors, including agriculture, insurance, and emergency preparedness planning. A National Strategy for Advancing Climate Modeling emphasizes the needs for climate models to evolve substantially in order to deliver climate projections at the scale and level of detail desired by decision makers, this report finds. Despite much recent progress in developing reliable climate models, there are still efficiencies to be gained across the large and diverse U.S. climate modeling community.

My Comment:

First, their statement that “….climate change has pushed climate patterns outside of historic norms” is convoluted. Climate has always been changing. Using “climate change” in this way is a misuse of the terminology, as I discussed in the post

The Need For Precise Definitions In Climate Science – The Misuse Of The Terminology “Climate Change”

Second, there are no reliable climate model predictions on multi-decadal time scales! This is clearly documented in my posts; e.g., see

More CMIP5 Regional Model Shortcomings

CMIP5 Climate Model Runs – A Scientifically Flawed Approach

The NRC Report also writes

Over the next several decades, climate change and its myriad consequences will be further unfolding and possibly accelerating, increasing the demand for climate information. Society will need to respond and adapt to impacts, such as sea level rise, a seasonally ice-free Arctic, and large-scale ecosystem changes. Historical records are no longer likely to be reliable predictors of future events; climate change will affect the likelihood and severity of extreme weather and climate events, which are a leading cause of economic and human losses with total losses in the hundreds of billions of dollars over the past few decades.

My Comment:

As I wrote earlier in this post, the multi-decadal climate model predictions have not only failed to skillfully predict changes in climate statistics over the past few decades, but cannot even simulate the time-averaged regional climates accurately enough! Moreover, in terms of the comment that

“…climate change will affect the likelihood and severity of extreme weather and climate events, which are a leading cause of economic and human losses with total losses in the hundreds of billions of dollars over the past few decades.”

this is yet another example of where the BS meter is sounding off! See, for example, my son’s most recent discussion of this failing by the climate community.

The NRC report continues

Computer models that simulate the climate are an integral part of providing climate information, in particular for future changes in the climate. Overall, climate modeling has made enormous progress in the past several decades, but meeting the information needs of users will require further advances in the coming decades.

They also write that

Climate models skillfully reproduce important, global-to-continental-scale features of the present climate, including the simulated seasonal-mean surface air temperature (within 3°C of observed (IPCC, 2007c), compared to an annual cycle that can exceed 50°C in places), the simulated seasonal-mean precipitation (typical errors are 50% or less on regional scales of 1000 km or larger that are well resolved by these models [Pincus et al., 2008]), and representations of major climate features such as major ocean current systems like the Gulf Stream (IPCC, 2007c) or the swings in Pacific sea-surface temperature, winds and rainfall associated with El Niño (AchutaRao and Sperber, 2006; Neale et al., 2008). Climate modeling also delivers useful forecasts for some phenomena from a month to several seasons ahead, such as seasonal flood risks.

My Comment: Actually, “climate modeling” has made little progress in simulating regional climate on multi-decadal time scales, and there is no demonstrated evidence that it can skillfully predict changes in the climate system. Indeed, the most robust work is the set of peer-reviewed papers discussed in my posts (which I also listed earlier in this post)

More CMIP5 Regional Model Shortcomings

CMIP5 Climate Model Runs – A Scientifically Flawed Approach

which document the lack of skill in the models.

The report also defines “climate” as

Climate is conventionally defined as the long-term statistics of the weather (e.g., temperature, precipitation, and other meteorological conditions) that characteristically prevail in a particular region.

Readers of my weblog should know that this is an inappropriately narrow definition of climate. In the NRC report

National Research Council, 2005: Radiative forcing of climate change: Expanding the concept and addressing uncertainties. Committee on Radiative Forcing Effects on Climate Change, Climate Research Committee, Board on Atmospheric Sciences and Climate, Division on Earth and Life Studies, The National Academies Press, Washington, D.C., 208 pp.

(which the new NRC report conveniently ignored), climate is defined as

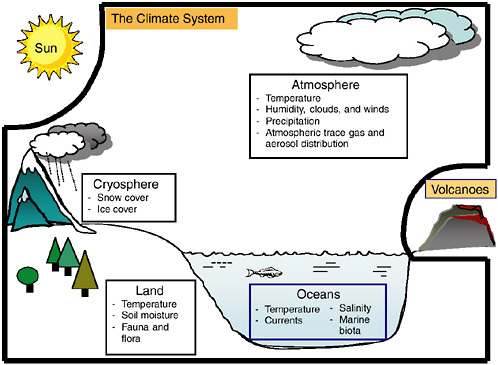

The system consisting of the atmosphere, hydrosphere, lithosphere, and biosphere, determining the Earth’s climate as the result of mutual interactions and responses to external influences (forcing). Physical, chemical, and biological processes are involved in interactions among the components of the climate system.

FIGURE 1-1 The climate system, consisting of the atmosphere, oceans, land, and cryosphere. Important state variables for each sphere of the climate system are listed in the boxes. For the purposes of this report, the Sun, volcanic emissions, and human-caused emissions of greenhouse gases and changes to the land surface are considered external to the climate system (from NRC, 2005)

This new NRC report “A National Strategy for Advancing Climate Modeling” misrepresents the capabilities of the climate models to simulate the climate system on multi-decadal time periods.

While I support studies that assess the predictive skill of the models and that use them for monthly and seasonal predictions (which can be quickly tested against observations), seeking to advance climate modeling by claiming that their proposed approach can deliver more accurate multi-decadal regional forecasts to policymakers and to impact scientists and engineers is, in my view, a dishonest communication to policymakers and to the public.

This need for advanced climate modeling should be promoted only and specifically with respect to assessing predictability on monthly, seasonal and longer time scales, not with respect to making multi-decadal predictions for the impacts communities.