As I have discussed in many posts, e.g. see

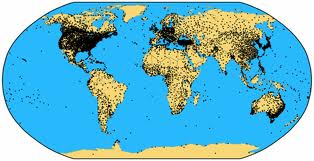

there is an enormous amount of money being spent to provide multi-decadal regional climate forecasts to the impacts communities. In this post, I select just a few quotes from peer-reviewed papers to document that the climate models do not have this skill. There is more detail on this in other posts also (e.g. see).

As the first example, from

Dawson A., T. N. Palmer and S. Corti, 2012: Simulating Regime Structures in Weather and Climate Prediction Models. Geophysical Research Letters. doi:10.1029/2012GL053284 In press.

We have shown that a low resolution atmospheric model, with horizontal resolution typical of CMIP5 models, is not capable of simulating the statistically significant regimes seen in reanalysis, …….It is therefore likely that the embedded regional model may represent an unrealistic realization of regional climate and variability.

Other examples include

Taylor et al, 2012: Afternoon rain more likely over drier soils. Nature. doi:10.1038/nature11377. Received 19 March 2012 Accepted 29 June 2012 Published online 12 September 2012

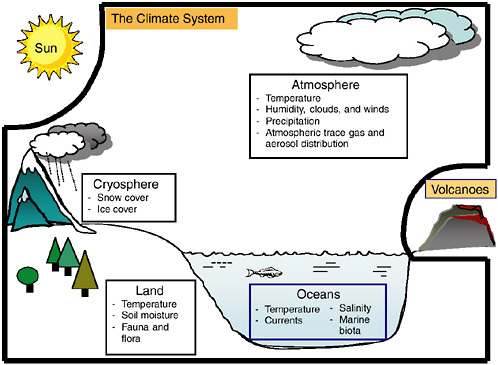

“…the erroneous sensitivity of convection schemes demonstrated here is likely to contribute to a tendency for large-scale models to `lock-in’ dry conditions, extending droughts unrealistically, and potentially exaggerating the role of soil moisture feedbacks in the climate system.”

Driscoll, S., A. Bozzo, L. J. Gray, A. Robock, and G. Stenchikov (2012), Coupled Model Intercomparison Project 5 (CMIP5) simulations of climate following volcanic eruptions, J. Geophys. Res., 117, D17105, doi:10.1029/2012JD017607. published 6 September 2012.

The study confirms previous similar evaluations and raises concern for the ability of current climate models to simulate the response of a major mode of global circulation variability to external forcings.

Fyfe, J. C., W. J. Merryfield, V. Kharin, G. J. Boer, W.-S. Lee, and K. von Salzen (2011), Skillful predictions of decadal trends in global mean surface temperature, Geophys. Res. Lett., 38, L22801, doi:10.1029/2011GL049508

“….for longer term decadal hindcasts a linear trend correction may be required if the model does not reproduce long-term trends. For this reason, we correct for systematic long-term trend biases.”

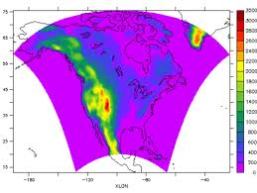

Xu, Zhongfeng and Zong-Liang Yang, 2012: An improved dynamical downscaling method with GCM bias corrections and its validation with 30 years of climate simulations. Journal of Climate 2012 doi: http://dx.doi.org/10.1175/JCLI-D-12-00005.1

“…the traditional dynamic downscaling (TDD) [i.e. without tuning] overestimates precipitation by 0.5-1.5 mm d-1…..The 2-year return level of summer daily maximum temperature simulated by the TDD is underestimated by 2-6°C over the central United States-Canada region.”

Anagnostopoulos, G. G., Koutsoyiannis, D., Christofides, A., Efstratiadis, A. & Mamassis, N. (2010) A comparison of local and aggregated climate model outputs with observed data. Hydrol. Sci. J. 55(7), 1094–1110

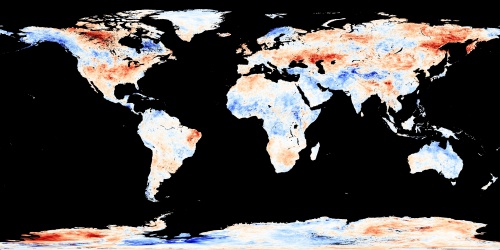

“…. local projections do not correlate well with observed measurements. Furthermore, we found that the correlation at a large spatial scale, i.e. the contiguous USA, is worse than at the local scale.”

Stephens, G. L., T. L’Ecuyer, R. Forbes, A. Gettelman, J.‐C. Golaz, A. Bodas‐Salcedo, K. Suzuki, P. Gabriel, and J. Haynes (2010), Dreary state of precipitation in global models, J. Geophys. Res., 115, D24211, doi:10.1029/2010JD014532.

“…models produce precipitation approximately twice as often as that observed and make rainfall far too lightly…..The differences in the character of model precipitation are systemic and have a number of important implications for modeling the coupled Earth system …….little skill in precipitation [is] calculated at individual grid points, and thus applications involving downscaling of grid point precipitation to yet even finer‐scale resolution has little foundation and relevance to the real Earth system.”

Sun, Z., J. Liu, X. Zeng, and H. Liang (2012), Parameterization of instantaneous global horizontal irradiance at the surface. Part II: Cloudy-sky component, J. Geophys. Res., doi:10.1029/2012JD017557, in press.

“Radiation calculations in global numerical weather prediction (NWP) and climate models are usually performed in 3-hourly time intervals in order to reduce the computational cost. This treatment can lead to an incorrect Global Horizontal Irradiance (GHI) at the Earth’s surface, which could be one of the error sources in modelled convection and precipitation. …… An important application of the scheme is in global climate models….It is found that these errors are very large, exceeding 800 W m-2 at many non-radiation time steps due to ignoring the effects of clouds….”

Ronald van Haren, Geert Jan van Oldenborgh, Geert Lenderink, Matthew Collins and Wilco Hazeleger, 2012: SST and circulation trend biases cause an underestimation of European precipitation trends Climate Dynamics 2012, DOI: 10.1007/s00382-012-1401-5

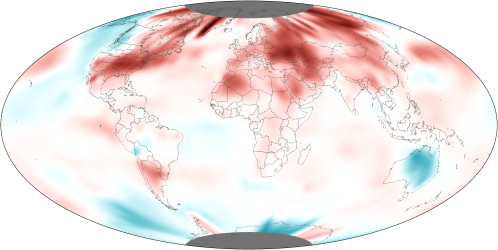

“To conclude, modeled atmospheric circulation and SST trends over the past century are significantly different from the observed ones. These mismatches are responsible for a large part of the misrepresentation of precipitation trends in climate models. The causes of the large trends in atmospheric circulation and summer SST are not known.”

As reported in

Kundzewicz, Z. W., and E.Z. Stakhiv (2010) Are climate models “ready for prime time” in water resources management applications, or is more research needed? Editorial. Hydrol. Sci. J. 55(7), 1085–1089.

they conclude that

“Simply put, the current suite of climate models were not developed to provide the level of accuracy required for adaptation-type analysis.”

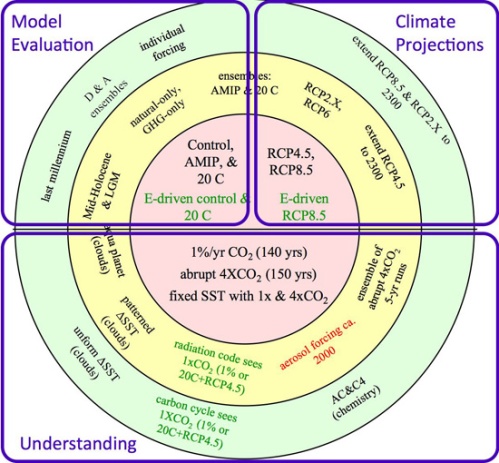

Unless the NSF, Linda Mearns and her co-authors, etc. can refute these peer-reviewed findings, they are failing to serve as objective scientists if they continue to ignore these studies and persist in presenting their multi-decadal climate predictions to the impacts communities. I wholeheartedly endorse the assessment of multi-decadal predictability. The papers I list earlier in this post serve as excellent examples of quality science in this context.

However, providing predictions (i.e. projections/forecasts) to the impacts communities and policymakers, in which they are claimed to be skillful, is not a robust scientific endeavor.

I also add that this issue is independent of the debate as to the importance of CO2, and other human climate forcings, on the regional climate in coming decades. It means, however, that providing regional multi-decadal predictions is not only without demonstrated skill, but is also misleading the impact and policy communities as to the actual risks that we face.